October 14, 2010

I want to see what you see: Babies treat “social robots” as sentient beings

Babies are curious about nearly everything, and they’re especially interested in what their adult companions are doing. Touch your tummy, they’ll touch their own tummies. Wave your hands in the air, they’ll wave their own hands. Turn your head to look at a toy, they’ll follow your eyes to see what’s so exciting.

Curiosity drives their learning. At 18 months old, babies are intensely curious about what makes humans tick. A team of University of Washington researchers is studying how infants tell which entities are “psychological agents” that can think and feel.

Research published in the October/November issue of Neural Networks provides a clue as to how babies decide whether a new object, such as a robot, is a sentient being or an inanimate object. Four times as many babies who watched a robot interact socially with people were willing to learn from the robot as babies who did not see the interactions.

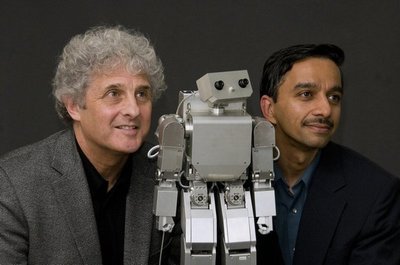

“Babies learn best through social interactions, but what makes something ‘social’ for a baby?” said Andrew Meltzoff, lead author of the paper and co-director of the UW’s Institute for Learning and Brain Sciences. “It is not just what something looks like, but how it moves and interacts with others that gives it special meaning to the baby.”

The UW researchers hypothesized that babies would be more likely to view the robot as a psychological being if they saw other friendly human beings socially interacting with it. “Babies look to us for guidance in how to interpret things, and if we treat something as a psychological agent, they will, too,” Meltzoff said. “Even more remarkably, they will learn from it, because social interaction unlocks the key to early learning.”

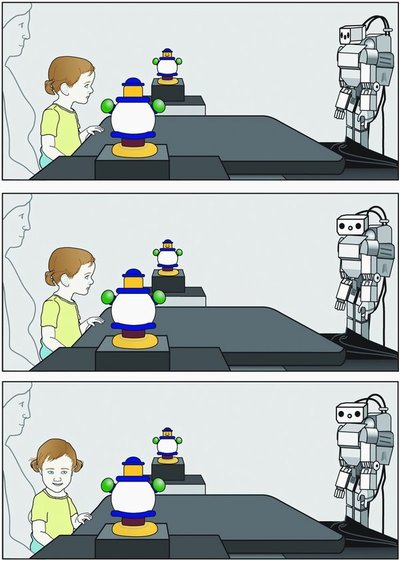

During the experiment, an 18-month-old baby sat on its parent’s lap facing Rechele Brooks, a UW research assistant professor and a co-author of the study. Sixty-four babies participated in the study, and they were tested individually. They played with toys for a few minutes, getting used to the experimental setting. Once the babies were comfortable, Brooks removed a barrier that had hidden a metallic humanoid robot with arms, legs, a torso and a cube-shaped head containing camera lenses for eyes. The robot — controlled by a researcher hidden from the baby — waved, and Brooks said, “Oh, hi! That’s our robot!”

Following a script, Brooks asked the robot, named Morphy, if it wanted to play, and then led it through a game. She would ask, “Where is your tummy?” and “Where is your head?” and the robot pointed to its torso and its head. Then Brooks demonstrated arm movements and Morphy imitated. The babies looked back and forth as if at a ping pong match, Brooks said.

At the end of the 90-second script, Brooks excused herself from the room. The researchers then measured whether the baby thought the robot was more than its metal parts.

The robot beeped and shifted its head slightly, just enough to capture the babies’ attention. It then turned its head to look at a toy next to the table where the baby sat on the parent’s lap. Most babies who had watched the robot play with Brooks, 13 of 16, followed the robot’s gaze. In a control group of babies who had been familiarized with the robot but had not seen Morphy engage in games, only three of 16 turned to look where the robot was looking.
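For readers who want to check the arithmetic behind the "four times as many" comparison, the counts reported above (13 of 16 versus three of 16) can be worked through in a few lines of Python. This is only an illustrative sketch: the function names are hypothetical, and the one-sided Fisher exact test shown here is one common way to compare two small groups, not necessarily the analysis the researchers used in the paper.

```python
from math import comb


def follow_rate(followed: int, total: int) -> float:
    """Share of babies in a group who followed the robot's gaze."""
    return followed / total


def fisher_one_sided(a: int, b: int, c: int, d: int) -> float:
    """One-sided Fisher exact p-value for the 2x2 table [[a, b], [c, d]].

    Returns the probability of seeing `a` or more in the top-left cell
    when all row and column totals are held fixed (hypergeometric tail).
    Assumption: this test is chosen for illustration only.
    """
    row1 = a + b            # size of the first group
    col1 = a + c            # total number of gaze followers
    n = a + b + c + d       # all babies in both groups
    upper = min(row1, col1)
    tail = sum(
        comb(col1, k) * comb(n - col1, row1 - k)
        for k in range(a, upper + 1)
    )
    return tail / comb(n, row1)


if __name__ == "__main__":
    # Counts taken from the article: followers and non-followers per group.
    social_followed, social_total = 13, 16
    control_followed, control_total = 3, 16

    social = follow_rate(social_followed, social_total)
    control = follow_rate(control_followed, control_total)
    p = fisher_one_sided(
        social_followed, social_total - social_followed,
        control_followed, control_total - control_followed,
    )

    print(f"social group:  {social:.1%}")
    print(f"control group: {control:.1%}")
    print(f"ratio:         {social / control:.2f}")
    print(f"one-sided p:   {p:.6f}")
```

The ratio of the two rates comes out a little above four, which matches the comparison quoted earlier in the release.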

“We are using modern technology to explore an age-old question about the essence of being human,” said Meltzoff, who holds the Job and Gertrud Tamaki Endowed Chair in psychology at the UW. “The babies are telling us that communication with other people is a fundamental feature of being human.”

The study has implications for humanoid robots, said co-author Rajesh Rao, UW associate professor of computer science and engineering and head of UW’s neural systems laboratory. Rao’s team helped design the computer programs that made Morphy appear social. “The study suggests that if you want to build a companion robot, it is not sufficient to make it look human,” said Rao. “The robot must also be able to interact socially with humans, an interesting challenge for robotics.”

The study was funded by the Office of Naval Research and the National Science Foundation. Aaron Shon, who graduated from UW with a doctorate in computer science and engineering, is also a co-author on the paper.

###

For more information, contact Meltzoff at 206-685-2045 or meltzoff@u.washington.edu, Brooks at 206-616-6107 or recheleb@u.washington.edu, or Rao at 206-685-9141 or rao@cs.washington.edu.